Facebook has started letting its partners fact-check photographs and videos beyond news articles, and proactively reviewing stories, TechCrunch reported.


The social media giant is also preemptively blocking the creation of millions of fake accounts per day. Facebook revealed the news on a conference call with journalists and later in a blog post.

"When you tease apart the overall digital misinformation problem, you find multiple types of bad content and many bad actors with different motivations. It is important to match the right approach to these various challenges. And that requires not just careful analysis of what has happened. We also have to have the most up to date intelligence to understand completely new types of misinformation," Chief Security Officer Alex Stamos said.

According to Stamos, the term "fake news" is used to describe many different types of activity that Facebook says it would like to prevent. "When we study these issues, we have to first define what is actually 'fake.' The most common issues are fake identities, fake audiences, false facts and false narratives," he added.

Stamos said there are two main motivations behind the organized groups that spread fake news.

The most common motivation for organized, professional groups is money, Stamos said. "The majority of misinformation we have found, by both quantity and reach, has been created by groups who gain financially by driving traffic to sites they own. When we’re fighting financially motivated actors, our goal is to increase the cost of their operations while driving down their profitability. This is not wholly unlike how we have countered various types of spammers in the past.

"The second class of organized actors are the ones who are looking to artificially influence public debate. These cover the spectrum from private but ideologically motivated groups to full-time employees of state intelligence services. Their targets might be foreign or domestic, and while much of the public discussion has been about countries trying to influence the debate abroad, we also must be on guard for domestic manipulation using some of the same techniques," he added.

Facebook says it will handle each country it operates in, and each election it works to support, differently. "We are looking ahead, by studying each upcoming election and working with external experts to understand the actors involved and the specific risks in each country. We are then using this process to guide how we build and train teams with the appropriate local language and cultural skills. Rather than wait for reports from our community, we now proactively look for potentially harmful types of election-related activity, such as Pages of foreign origin that are distributing inauthentic civic content. If we find any, we then send these suspicious accounts to be manually reviewed by our security team to see if they violate our Community Standards or our Terms of Service," said Samidh Chakrabarti, Product Manager at Facebook.

![submenu-img]() BMW i5 M60 xDrive launched in India, all-electric sedan priced at Rs 11950000

BMW i5 M60 xDrive launched in India, all-electric sedan priced at Rs 11950000![submenu-img]() This superstar was arrested several times by age 17, thrown out of home, once had just Rs 250, now worth Rs 6600 crore

This superstar was arrested several times by age 17, thrown out of home, once had just Rs 250, now worth Rs 6600 crore![submenu-img]() Meet Reliance’s highest paid employee, gets over Rs 240000000 salary, he is Mukesh Ambani’s…

Meet Reliance’s highest paid employee, gets over Rs 240000000 salary, he is Mukesh Ambani’s… ![submenu-img]() Meet lesser-known relative of Mukesh Ambani, Anil Ambani, has worked with BCCI, he is married to...

Meet lesser-known relative of Mukesh Ambani, Anil Ambani, has worked with BCCI, he is married to...![submenu-img]() Made in just Rs 95,000, this film was a superhit, but destroyed lead actress' career, saw controversy over bold scenes

Made in just Rs 95,000, this film was a superhit, but destroyed lead actress' career, saw controversy over bold scenes![submenu-img]() DNA Verified: Is CAA an anti-Muslim law? Centre terms news report as 'misleading'

DNA Verified: Is CAA an anti-Muslim law? Centre terms news report as 'misleading'![submenu-img]() DNA Verified: Lok Sabha Elections 2024 to be held on April 19? Know truth behind viral message

DNA Verified: Lok Sabha Elections 2024 to be held on April 19? Know truth behind viral message![submenu-img]() DNA Verified: Modi govt giving students free laptops under 'One Student One Laptop' scheme? Know truth here

DNA Verified: Modi govt giving students free laptops under 'One Student One Laptop' scheme? Know truth here![submenu-img]() DNA Verified: Shah Rukh Khan denies reports of his role in release of India's naval officers from Qatar

DNA Verified: Shah Rukh Khan denies reports of his role in release of India's naval officers from Qatar![submenu-img]() DNA Verified: Is govt providing Rs 1.6 lakh benefit to girls under PM Ladli Laxmi Yojana? Know truth

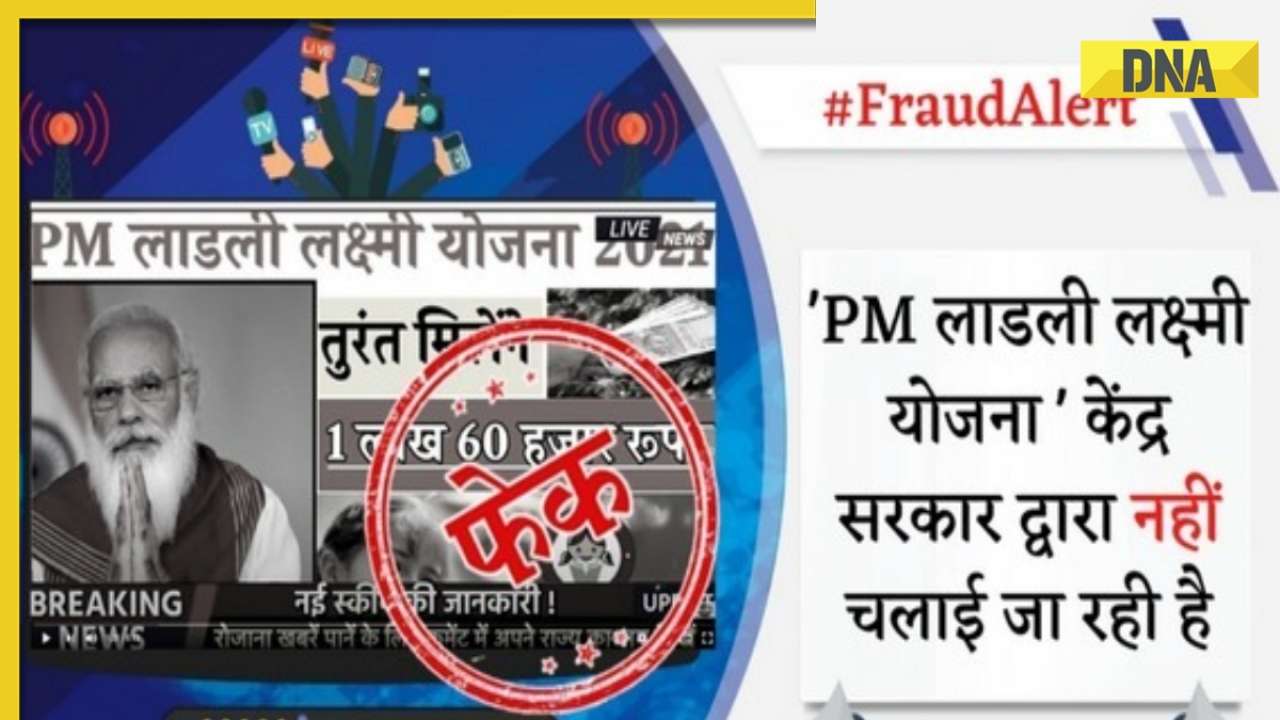

DNA Verified: Is govt providing Rs 1.6 lakh benefit to girls under PM Ladli Laxmi Yojana? Know truth![submenu-img]() In pics: Arti Singh stuns in red lehenga as she ties the knot with beau Dipak Chauhan in dreamy wedding

In pics: Arti Singh stuns in red lehenga as she ties the knot with beau Dipak Chauhan in dreamy wedding![submenu-img]() Actors who died due to cosmetic surgeries

Actors who died due to cosmetic surgeries![submenu-img]() See inside pics: Malayalam star Aparna Das' dreamy wedding with Manjummel Boys actor Deepak Parambol

See inside pics: Malayalam star Aparna Das' dreamy wedding with Manjummel Boys actor Deepak Parambol ![submenu-img]() In pics: Salman Khan, Alia Bhatt, Rekha, Neetu Kapoor attend grand premiere of Sanjay Leela Bhansali's Heeramandi

In pics: Salman Khan, Alia Bhatt, Rekha, Neetu Kapoor attend grand premiere of Sanjay Leela Bhansali's Heeramandi![submenu-img]() Streaming This Week: Crakk, Tillu Square, Ranneeti, Dil Dosti Dilemma, latest OTT releases to binge-watch

Streaming This Week: Crakk, Tillu Square, Ranneeti, Dil Dosti Dilemma, latest OTT releases to binge-watch![submenu-img]() What is inheritance tax?

What is inheritance tax?![submenu-img]() DNA Explainer: What is cloud seeding which is blamed for wreaking havoc in Dubai?

DNA Explainer: What is cloud seeding which is blamed for wreaking havoc in Dubai?![submenu-img]() DNA Explainer: What is Israel's Arrow-3 defence system used to intercept Iran's missile attack?

DNA Explainer: What is Israel's Arrow-3 defence system used to intercept Iran's missile attack?![submenu-img]() DNA Explainer: How Iranian projectiles failed to breach iron-clad Israeli air defence

DNA Explainer: How Iranian projectiles failed to breach iron-clad Israeli air defence![submenu-img]() DNA Explainer: What is India's stand amid Iran-Israel conflict?

DNA Explainer: What is India's stand amid Iran-Israel conflict?![submenu-img]() This superstar was arrested several times by age 17, thrown out of home, once had just Rs 250, now worth Rs 6600 crore

This superstar was arrested several times by age 17, thrown out of home, once had just Rs 250, now worth Rs 6600 crore![submenu-img]() Made in just Rs 95,000, this film was a superhit, but destroyed lead actress' career, saw controversy over bold scenes

Made in just Rs 95,000, this film was a superhit, but destroyed lead actress' career, saw controversy over bold scenes![submenu-img]() Meet 72-year-old who earns Rs 280 cr per film, Asia's highest-paid actor, bigger than Shah Rukh, Salman, Akshay, Prabhas

Meet 72-year-old who earns Rs 280 cr per film, Asia's highest-paid actor, bigger than Shah Rukh, Salman, Akshay, Prabhas![submenu-img]() This star, who once lived in chawl, worked as tailor, later gave four Rs 200-crore films; he's now worth...

This star, who once lived in chawl, worked as tailor, later gave four Rs 200-crore films; he's now worth...![submenu-img]() Tamil star Prasanna reveals why he chose series Ranneeti for Hindi debut: 'Getting into Bollywood is not...'

Tamil star Prasanna reveals why he chose series Ranneeti for Hindi debut: 'Getting into Bollywood is not...'![submenu-img]() IPL 2024: Virat Kohli, Rajat Patidar fifties and disciplined bowling help RCB beat Sunrisers Hyderabad by 35 runs

IPL 2024: Virat Kohli, Rajat Patidar fifties and disciplined bowling help RCB beat Sunrisers Hyderabad by 35 runs![submenu-img]() 'This is the problem in India...': Wasim Akram's blunt take on fans booing Mumbai Indians skipper Hardik Pandya

'This is the problem in India...': Wasim Akram's blunt take on fans booing Mumbai Indians skipper Hardik Pandya![submenu-img]() KKR vs PBKS, IPL 2024: Predicted playing XI, live streaming details, weather and pitch report

KKR vs PBKS, IPL 2024: Predicted playing XI, live streaming details, weather and pitch report![submenu-img]() KKR vs PBKS IPL 2024 Dream11 prediction: Fantasy cricket tips for Kolkata Knight Riders vs Punjab Kings

KKR vs PBKS IPL 2024 Dream11 prediction: Fantasy cricket tips for Kolkata Knight Riders vs Punjab Kings![submenu-img]() IPL 2024: KKR star Rinku Singh finally gets another bat from Virat Kohli after breaking previous one - Watch

IPL 2024: KKR star Rinku Singh finally gets another bat from Virat Kohli after breaking previous one - Watch![submenu-img]() Viral video: Teacher's cute way to capture happy student faces melts internet, watch

Viral video: Teacher's cute way to capture happy student faces melts internet, watch![submenu-img]() Woman attends online meeting on scooter while stuck in traffic, video goes viral

Woman attends online meeting on scooter while stuck in traffic, video goes viral![submenu-img]() Viral video: Pilot proposes to flight attendant girlfriend before takeoff, internet hearts it

Viral video: Pilot proposes to flight attendant girlfriend before takeoff, internet hearts it![submenu-img]() Pakistani teen receives life-saving heart transplant from Indian donor, details here

Pakistani teen receives life-saving heart transplant from Indian donor, details here![submenu-img]() Viral video: Truck driver's innovative solution to beat the heat impresses internet, watch

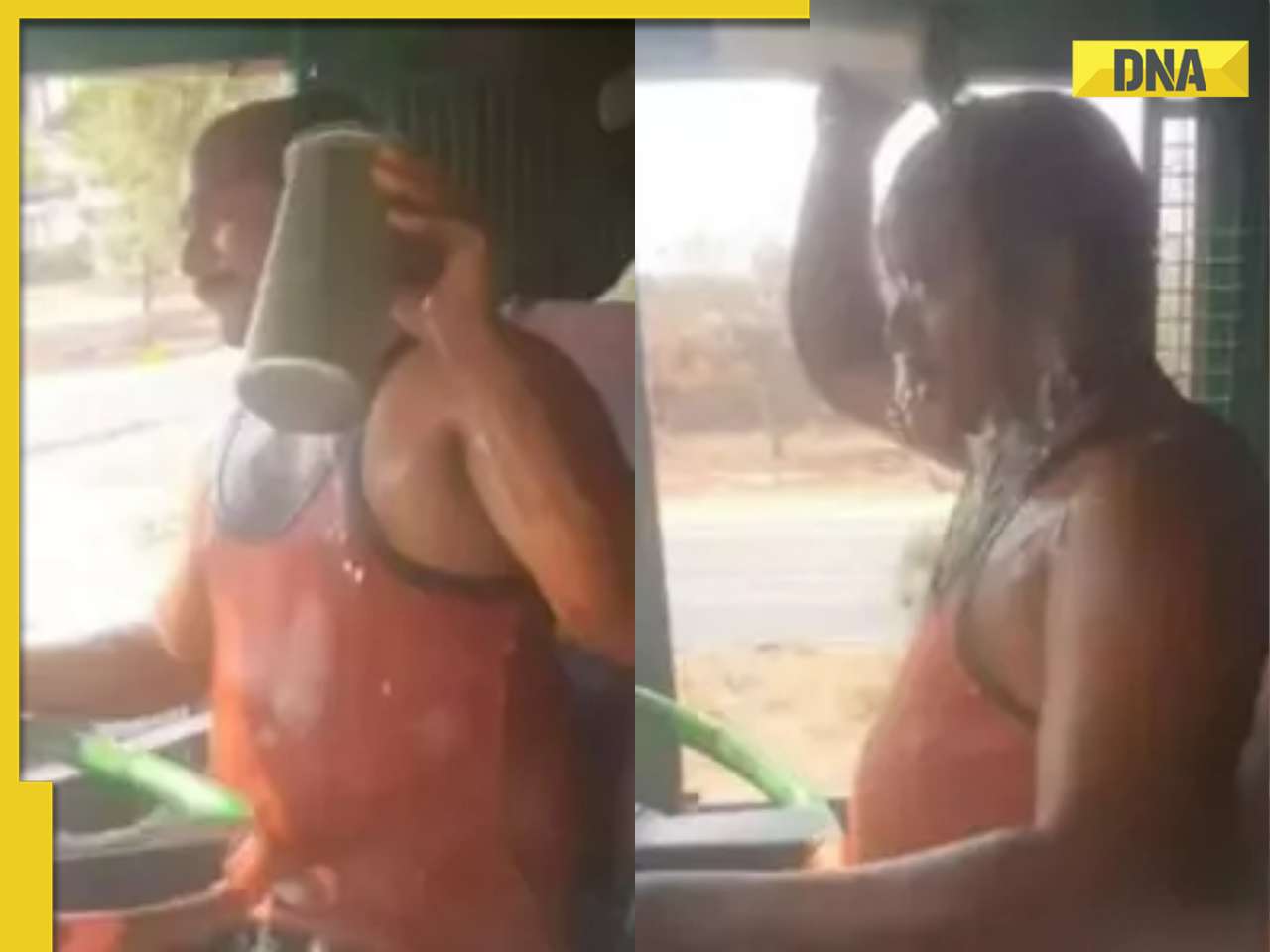

Viral video: Truck driver's innovative solution to beat the heat impresses internet, watch

)

)

)

)

)

)

)