Effects of Background Music on Objective and Subjective Performance Measures in an Auditory BCI

- 1 Key Laboratory of Advanced Control and Optimization for Chemical Processes, Ministry of Education, East China University of Science and Technology, Shanghai, China

- 2 Department of Cognitive Science, University of California San Diego, La Jolla, CA, USA

- 3 Institute of Psychology, University of Würzburg, Würzburg, Germany

- 4 Laboratory for Advanced Brain Signal Processing, Brain Science Institute, RIKEN, Wako-shi, Japan

- 5 Skolkovo Institute of Science and Technology, Moscow, Russia

- 6 Nicolaus Copernicus University (UMK), Torun, Poland

Several studies have explored brain computer interface (BCI) systems based on auditory stimuli, which could help patients with visual impairments. Usability and user satisfaction are important considerations in any BCI. Although background music can influence emotion and performance in other task environments, and many users may wish to listen to music while using a BCI, auditory and other BCIs are typically studied without background music. Some work has explored the possibility of using polyphonic music in auditory BCI systems. However, this approach requires users with good musical skills and has not been explored in online experiments. Our hypothesis was that an auditory BCI with background music would be preferred by subjects over a similar BCI without background music, without any difference in BCI performance. We introduce a simple paradigm (which does not require musical skill) using percussion instrument sound stimuli and background music, and evaluate it in both offline and online experiments. The results showed that subjects preferred the auditory BCI with background music. Different performance measures did not reveal any significant performance effect when comparing background music vs. no background music. Since the addition of background music does not impair BCI performance but is preferred by users, designers of auditory (and perhaps other) BCIs should consider including it. Our study also indicates that auditory BCIs can be effective even if the auditory channel is simultaneously otherwise engaged.

Introduction

Brain computer interface (BCI) technology has been used to help disabled patients communicate or control external devices through brain activity (Vidal, 1973; Kübler et al., 2001; Blankertz et al., 2010; Zhang et al., 2013a). Noninvasive BCI systems typically rely on the scalp-recorded electroencephalogram (EEG) (Wolpaw et al., 2002; Adeli et al., 2003; Sellers and Donchin, 2006; Allison et al., 2007; Jin et al., 2011a,b, 2012; Ortiz-Rosario and Adeli, 2013; Li et al., 2014; Zhang et al., 2016). Many BCIs require the user to perform specific voluntary tasks to produce distinct EEG patterns, such as paying attention to a visual, tactile, or auditory stimulus (Brouwer and Van Erp, 2010; Höhne et al., 2011; Jin et al., 2011a; Fazel-Rezai et al., 2012; Kaufmann et al., 2014; Cai et al., 2015). We refer to these three approaches as visual, tactile, and auditory BCIs, respectively.

Visual BCIs can yield high classification accuracy and information transfer rate (Kaufmann et al., 2011; Riccio et al., 2012; Jin et al., 2014, 2015; Zhang et al., 2014; Chen et al., 2015; Yin et al., 2016). However, these BCIs are not useful for patients who cannot see. Tactile BCIs have been validated with patients with visual disabilities, including persons with a disorder of consciousness (DOC) (Kaufmann et al., 2014; Edlinger et al., 2015; Li et al., 2016). However, the devices that deliver the tactile stimuli used in modern tactile BCIs are less readily available and usable than the tools required for auditory BCIs. Most BCI end users do not own vibrotactile stimulators or have experience using them, but they do have headphones, laptops, cell phones, and/or other devices that can generate auditory stimuli adequate for modern auditory BCIs.

Several groups have shown that an auditory P300 BCI could serve as a communication channel for severely paralyzed patients, including persons diagnosed with DOC. Indeed, DOC patients could also benefit from BCI technology for assessing cognitive function (Risetti et al., 2013; Lesenfants et al., 2016; Käthner et al., 2013; Edlinger et al., 2015; Ortner et al., accepted). Since many DOC patients cannot see, and have very limited means for communication and control, they have a particular need for improved auditory BCIs.

Auditory BCI systems require users to concentrate on a target sound, such as a tone, chime, or word (Kübler et al., 2001, 2009; Hill et al., 2004; Vidaurre and Blankertz, 2010; Kaufmann et al., 2013; Treder et al., 2014). Auditory BCIs pose challenges that differ from those of visual BCIs. Compared to vision, sound perception is relatively information-poor (Kang, 2006). Accordingly, the event-related potentials evoked in auditory BCI systems may allow less effective discrimination between attended and unattended stimuli (Belitski et al., 2011; Chang et al., 2013). To improve the performance of auditory P300 BCI systems, many studies have focused on enhancing the difference between attended and ignored events, which could produce more recognizable differences in the P300 and/or other components (Hill et al., 2004; Furdea et al., 2009; Guo et al., 2010; Halder et al., 2010; Nambu et al., 2013; Höhne and Tangermann, 2014). These efforts have made progress, but they also show the ongoing challenge of identifying the best conditions for an auditory BCI.

Some work has explored BCIs that control music players and similar systems to improve quality of life. Music can affect users' emotions (Kang, 2006; Lin et al., 2014), which could make BCI users feel more comfortable during BCI use. Tseng et al. (2015) developed a system that selects music for users based on their mental state. Treder et al. (2014) explored a multi-streamed musical oddball paradigm as an approach to BCIs. Their article presents a sound justification for this paradigm: “In Western societies, the skills involved in music listening and partly, music understanding are typically overlearnt.”

This paper introduces a simple auditory BCI system that includes background music, which we validated through offline and online experiments. This BCI system does not require musical training or expertise. Percussion sounds from cymbals, snare drums, and tom tom drums were used as stimuli and presented over headphones. We chose percussion stimuli because they are easy to recognize and easy to distinguish from each other and from the background piano music. We hypothesized that subjects would prefer background music and would not perform worse while background music was playing. Hence, we explored the effect of background music on auditory BCI performance and on users' subjective experience, evaluated via surveys. If successful, our approach would render auditory BCIs more ecologically valid.

Methods and Materials

Subjects and Stimuli

Sixteen healthy right-handed subjects (8 male, 8 female, aged 21–27 years, mean age 24.8 ± 1.5) participated in this study. Nine of the subjects had prior experience with an auditory BCI. All subjects' native language was Mandarin Chinese. Each subject participated in one session within 1 day. The order of the conditions was counterbalanced across subjects for each session.

All subjects signed a written consent form prior to this experiment and were paid 50 RMB for their participation in each session. The local ethics committee approved the consent form and experimental procedure before any of the subjects participated.

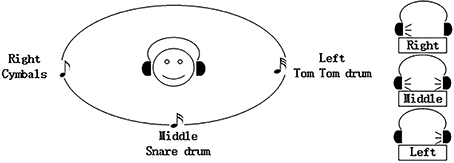

Three percussion sounds (cymbals, snare drums, and tom tom drums) were used as stimuli. The “cymbals” stimulus was played in the right headphone, the “snare drum” stimulus was played through both headphones to sound as if it came from the middle, and the “tom tom drum” stimulus was played in the left headphone (see Figure 1). For each subject, we confirmed the subject could hear the stimulus clearly.

Experimental Set Up, Offline, and Online Protocols

EEG signals were recorded with a g.HIamp amplifier and a g.EEGcap (Guger Technologies, Graz, Austria) with active electrodes, sampled at 1200 Hz and band-pass filtered between 0.1 and 100 Hz. The g.HIamp uses wide-range DC-coupled amplifier technology in combination with 24-bit sampling, which gives an input range of ±250 mV with a resolution of < 60 nV. Electrode impedances were below 30 kΩ. Data were recorded and analyzed using the BCI platform software package developed at the East China University of Science and Technology. We recorded from 30 EEG electrode positions based on the extended international 10–20 system (see Figure 2). Active electrodes were referenced to the nose, with a frontal electrode (FPz) as ground. For analysis and classification, the recorded data were high-pass filtered at 0.1 Hz, low-pass filtered at 30 Hz, and notch-filtered at 50 Hz (Käthner et al., 2013). A prestimulus interval of 100 ms was used for baseline correction of single trials.

This study compared two conditions: with no background music (WNB) and with background music (WB). The latter condition used piano music as the background: the piece “Confession” by Falcom Sound Team jdk. Each subject participated in offline and online sessions for both conditions within the same recording session.

In the offline session, the order of the two conditions was decided pseudorandomly. Each subject completed fifteen runs of one condition, then fifteen runs of the other condition, with a 2 min break after every five runs. Each run contained twelve trials, each consisting of one presentation of each of the three auditory stimuli. At the beginning of each run, an auditory cue in Chinese told the subject which stimulus to count during the upcoming run. The first auditory stimulus began 2 s after the trial began. The stimulus “on” time was 200 ms and the stimulus “off” time was always 100 ms, yielding a stimulus onset asynchrony (SOA) of 300 ms. The order of the three stimuli was randomized, with each stimulus type always presented from its own location (see Figure 1), under the constraint that the same stimulus never occurred twice in succession. Hence, the target-to-target interval (TTI) was at least 600 ms. There was a 4 s break at the end of each run, and no feedback was provided. Thus, the offline session took a little over 15 min (0.3 s × three stimuli × twelve trials × fifteen runs × two conditions + a 2 min break × five times, that is, 5.4 min + 10 min). Subjects had a 5 min break after the offline session.
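The stimulus-order constraint and the session-length estimate can be checked with a short Python sketch. Drawing each trial as a random permutation and reshuffling when its first stimulus would repeat the previous one is our assumed implementation of the constraint, not necessarily the authors'; the function names are hypothetical.

```python
import random

# Stimulus indices follow the text: 0 = tom tom drum (left),
# 1 = snare drum (middle), 2 = cymbals (right).
N_STIMULI = 3
SOA_S = 0.3  # 200 ms on + 100 ms off


def make_run(n_trials=12, rng=random):
    """Concatenate n_trials random permutations of the three stimuli so that
    no stimulus appears twice in succession, not even across trial borders."""
    sequence = []
    for _ in range(n_trials):
        trial = list(range(N_STIMULI))
        rng.shuffle(trial)
        while sequence and trial[0] == sequence[-1]:
            rng.shuffle(trial)  # reshuffle until the boundary rule holds
        sequence.extend(trial)
    return sequence


def min_same_stimulus_gap_ms(sequence, soa_s=SOA_S):
    """Shortest interval (ms) between two presentations of the same stimulus."""
    last_seen, best = {}, float("inf")
    for i, s in enumerate(sequence):
        if s in last_seen:
            best = min(best, (i - last_seen[s]) * soa_s * 1000)
        last_seen[s] = i
    return best


# Stimulation time plus breaks, as in the estimate given in the text (minutes).
offline_min = (SOA_S * N_STIMULI * 12 * 15 * 2 + 2 * 60 * 5) / 60
```

Because the same stimulus can never occupy two adjacent positions, any two target presentations are at least two SOAs apart, which is where the 600 ms minimum TTI comes from. Like the formula in the text, the 15.4 min estimate ignores the cues, the 2 s delay before the first stimulus, and the 4 s end-of-run breaks.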

The online session presented the two conditions in the same order as the offline session. However, there were 24 runs per condition, the number of trials per run was selected adaptively, and subjects received feedback at the end of each run (Jin et al., 2011a). With this “adaptive classifier,” the system ended the run and presented feedback as soon as the classifier chose the same output on two consecutive trials. Thus, the minimum number of trials per run was two. Each run still began with an auditory cue (in Chinese) instructing the subject which target stimulus to count. At the end of the run, the target identified by the BCI system was presented to the subject via a human voice played from the target location (left, right, or front), as well as on the monitor. The duration of the online session varied because of the adaptive classifier.
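The adaptive stopping rule can be sketched in Python as follows. `decide` stands for whatever produces the classifier output after each trial (for example, an argmax over scores accumulated so far, which is an assumption); the cap `max_trials=12` and the fallback used when the cap is reached are hypothetical, since the text does not specify them.

```python
from typing import Callable, Hashable, Optional, Tuple


def adaptive_run(
    decide: Callable[[int], Hashable], max_trials: int = 12
) -> Tuple[Optional[Hashable], int]:
    """Stop as soon as two consecutive trials yield the same classifier output.

    decide(k) returns the classifier's output after trial k (1-based).
    max_trials is a hypothetical cap; the text does not state one.
    Returns (selected output, number of trials used).
    """
    previous = None
    for k in range(1, max_trials + 1):
        current = decide(k)
        if k > 1 and current == previous:
            return current, k  # two consecutive agreeing outputs: stop early
        previous = current
    return previous, max_trials  # assumption: fall back to the last output
```

With agreeing outputs on trials 1 and 2 the run ends after two trials, matching the minimum stated in the text.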

Classification Scheme

The EEG was down-sampled by a factor of 30 (from 1200 Hz to 40 Hz) by selecting every 30th sample. The first 1000 ms of EEG after each stimulus presentation was used for feature extraction. Spatial-Temporal Discriminant Analysis (STDA) was used for classification (Zhang et al., 2013b). Data acquired offline were used to train the STDA classifier model, which was then used in the online BCI system. STDA has exhibited superior ERP classification performance relative to competing algorithms (Hoffmann et al., 2008).
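The feature extraction and a simplified STDA-style classifier can be sketched with NumPy. This follows our reading of STDA as alternating Fisher-criterion estimation of a spatial projection W1 and a temporal projection W2 (Zhang et al., 2013b); the all-ones initialization, the shrinkage term, the number of iterations, the projection sizes (k1 = k2 = 2), and the two-class LDA applied to the projected features are assumptions, and all function names are hypothetical.

```python
import numpy as np


def extract_features(epochs, step=30, fs=1200, window_ms=1000):
    """Keep the first 1000 ms after onset and every 30th sample.

    epochs: (n_epochs, n_channels, n_samples), starting at stimulus onset.
    At 1200 Hz this yields 40 samples (40 Hz) per channel.
    """
    n = int(window_ms * fs / 1000)
    return epochs[:, :, :n:step]


def _fisher_directions(Z, y, k, reg=1e-3):
    """Top-k solutions of Sb w = lambda Sw w along axis 1 of Z (n, d, m)."""
    d = Z.shape[1]
    grand_mean = Z.mean(axis=0)
    Sb = np.zeros((d, d))
    Sw = np.zeros((d, d))
    for c in np.unique(y):
        Zc = Z[y == c]
        class_mean = Zc.mean(axis=0)
        diff = class_mean - grand_mean
        Sb += len(Zc) * diff @ diff.T                         # between-class scatter
        centered = Zc - class_mean
        Sw += np.einsum("nij,nkj->ik", centered, centered)   # within-class scatter
    Sw += reg * np.trace(Sw) / d * np.eye(d)  # shrinkage (assumption)
    L_inv = np.linalg.inv(np.linalg.cholesky(Sw))
    vals, vecs = np.linalg.eigh(L_inv @ Sb @ L_inv.T)  # whitened eigenproblem
    return L_inv.T @ vecs[:, np.argsort(vals)[::-1][:k]]


def fit_stda(X, y, k1=2, k2=2, n_iter=5):
    """Alternately estimate spatial (W1: C x k1) and temporal (W2: T x k2)
    projections of X (n, C, T) that maximize a Fisher criterion."""
    W2 = np.ones((X.shape[2], k2))
    for _ in range(n_iter):
        W1 = _fisher_directions(X @ W2, y, k1)                     # spatial step
        W2 = _fisher_directions(X.transpose(0, 2, 1) @ W1, y, k2)  # temporal step
    return W1, W2


def project(X, W1, W2):
    """Feature vector vec(W1^T X W2) for each epoch."""
    return np.einsum("ck,nct,tl->nkl", W1, X, W2).reshape(len(X), -1)


def fit_lda(F, y, reg=1e-6):
    """Two-class Fisher LDA on the projected features (target = 1)."""
    m0, m1 = F[y == 0].mean(axis=0), F[y == 1].mean(axis=0)
    S = np.atleast_2d(np.cov(F[y == 0], rowvar=False) + np.cov(F[y == 1], rowvar=False))
    w = np.linalg.solve(S + reg * np.eye(F.shape[1]), m1 - m0)
    return w, -w @ (m0 + m1) / 2
```

In an online run, each of the three stimuli would receive a score `F @ w + b`, and the stimulus with the highest (possibly accumulated) score would be selected; how the authors combined scores across trials is not stated in the text.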

Subjective Report

After completing the last run of each session, each subject was asked two questions about each condition. Each question could be answered on a 1–5 rating scale indicating strong disagreement, moderate disagreement, neutrality, moderate agreement, or strong agreement. Subjects were also allowed to answer with intermediate replies (i.e., 1.5, 2.5, 3.5, and 4.5), thus allowing nine possible responses to each question. All questions were asked in Chinese. The two questions were:

(1) Did you prefer this condition when you were doing the auditory task?

(2) Did this condition make you tired?

Statistical Analysis

Before classification accuracy, “outputs per minute,” and “correct outputs per minute” were compared statistically, they were tested for normal distribution (one-sample Kolmogorov-Smirnov test) and sphericity (Mauchly's test). Subsequently, repeated measures ANOVAs or t-tests with stimulus type as factor were conducted. Post-hoc comparisons were performed with Tukey-Kramer tests. The alpha level was adjusted according to Bonferroni-Holm. Non-parametric Kendall tests were computed to statistically compare the questionnaire replies.
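For three pairwise comparisons at alpha = 0.05, the first step of the Bonferroni-Holm procedure uses alpha/3 ≈ 0.0167, which plausibly corresponds to the 0.016 threshold reported in the Results. A minimal Python sketch of the step-down procedure (the function name is hypothetical):

```python
def holm_bonferroni(pvalues, alpha=0.05):
    """Holm's step-down procedure: compare the i-th smallest of m p-values
    (i = 1..m) with alpha / (m - i + 1) and stop at the first failure."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    reject = [False] * m
    for rank, idx in enumerate(order):  # rank is 0-based
        if pvalues[idx] > alpha / (m - rank):
            break  # this and all larger p-values are retained
        reject[idx] = True
    return reject
```

Unlike a plain Bonferroni correction, later steps use progressively less strict thresholds (alpha/2, then alpha), so Holm never rejects fewer hypotheses than Bonferroni.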

Results

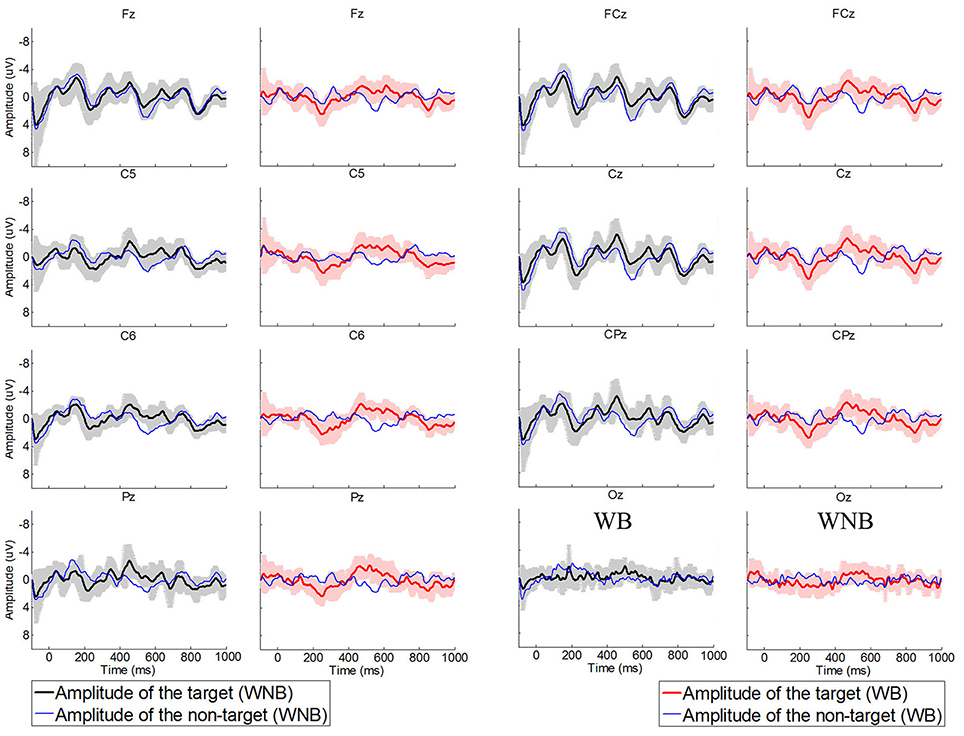

Figure 3 shows the averaged evoked potentials from the online data over sites Fz, FCz, C5, Cz, C6, CPz, Pz, and Oz. These potentials were averaged over subjects who achieved more than 70% classification accuracy in both conditions. Figure 3 shows fairly weak negative potentials before 200 ms, and less distinct potentials in occipital areas.

Figure 3. Averaged evoked potentials of target trials and non-target trials in the high volume session for WNB and WB conditions.

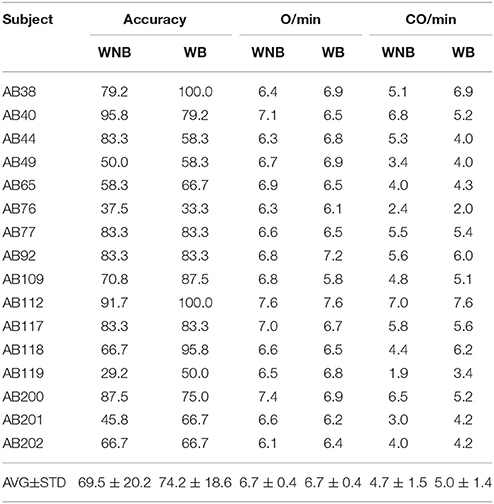

This study had two conditions: WNB and WB. Table 1 shows the online classification accuracy, “outputs per minute,” and “correct outputs per minute” for both conditions. The “outputs per minute” was defined as follows:

$$N = \frac{60}{N_a t_1 + t_2}$$

in which $N$ is the “outputs per minute” and $N_a$ is the average number of trials per run. The terms $t_1$ and $t_2$ denote the time (in seconds) required for a trial and the 4 s break between two runs, respectively. The “correct outputs per minute” was defined as

$$CN = N \times acc$$

in which $CN$ is the “correct outputs per minute,” and $acc$ is the accuracy of each subject in the online experiment.
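As a worked example of these measures, the sketch below evaluates N = 60 / (Na·t1 + t2) and CN = N × acc, with times in seconds. It assumes t1 = 0.9 s (three stimuli at the 300 ms SOA) and t2 = 4 s; the inputs Na = 2.5 and acc = 0.8 are illustrative numbers, not values from Table 1, and the function names are hypothetical.

```python
def outputs_per_minute(n_avg_trials, t_trial_s=0.9, t_break_s=4.0):
    """N = 60 / (Na * t1 + t2), with all times in seconds.

    t_trial_s = 0.9 assumes three stimuli at a 300 ms SOA per trial.
    """
    return 60.0 / (n_avg_trials * t_trial_s + t_break_s)


def correct_outputs_per_minute(n_avg_trials, accuracy, **kwargs):
    """CN = N * acc."""
    return outputs_per_minute(n_avg_trials, **kwargs) * accuracy
```

With these inputs, N = 60 / (2.5 × 0.9 + 4) = 60 / 6.25 = 9.6 outputs per minute and CN = 9.6 × 0.8 = 7.68 correct outputs per minute. Because the 4 s break is a fixed cost per run, the adaptive classifier's reduction of Na has a diminishing effect on N.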

Table 1. Online classification accuracy, “outputs per minute” (O/min) and “correct outputs per minute” (CO/min).

Paired-samples t-tests were used to compare the WNB and WB conditions. There were no significant differences between the WNB and WB conditions in classification accuracy [t(15) = −1.2, p > 0.05], in “outputs per minute” [t(15) = 0.8, p > 0.05], or in “correct outputs per minute” [t(15) = −0.9, p > 0.05]. This result suggests that background music did not affect performance.
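A paired-samples t-test reduces to a one-sample test on the per-subject differences, so with 16 subjects it has a single degrees-of-freedom value, n - 1 = 15. A minimal standard-library Python sketch (the function name is hypothetical; p-values would additionally require the t distribution):

```python
import math
import statistics


def paired_t(a, b):
    """Paired-samples t statistic and its degrees of freedom (n - 1)."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally long samples with n >= 2")
    d = [x - y for x, y in zip(a, b)]  # per-subject differences
    se = statistics.stdev(d) / math.sqrt(len(d))  # standard error of mean difference
    return statistics.mean(d) / se, len(d) - 1
```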

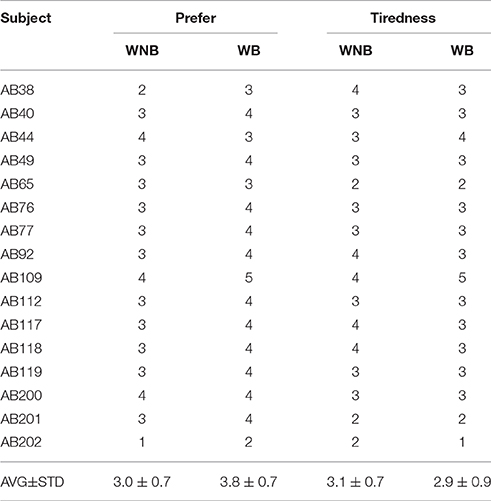

Table 2 presents the subjects' replies to questionnaires about the WNB and WB conditions. Non-parametric Kendall tests were used to explore these differences. Results showed a significant preference for background music (p < 0.05). Only one subject (AB44) showed a preference for the WNB condition. AB44 also verbally reported that he felt that the background music affected his task performance. There was no significant difference between the WNB and WB conditions in tiredness (p > 0.05).

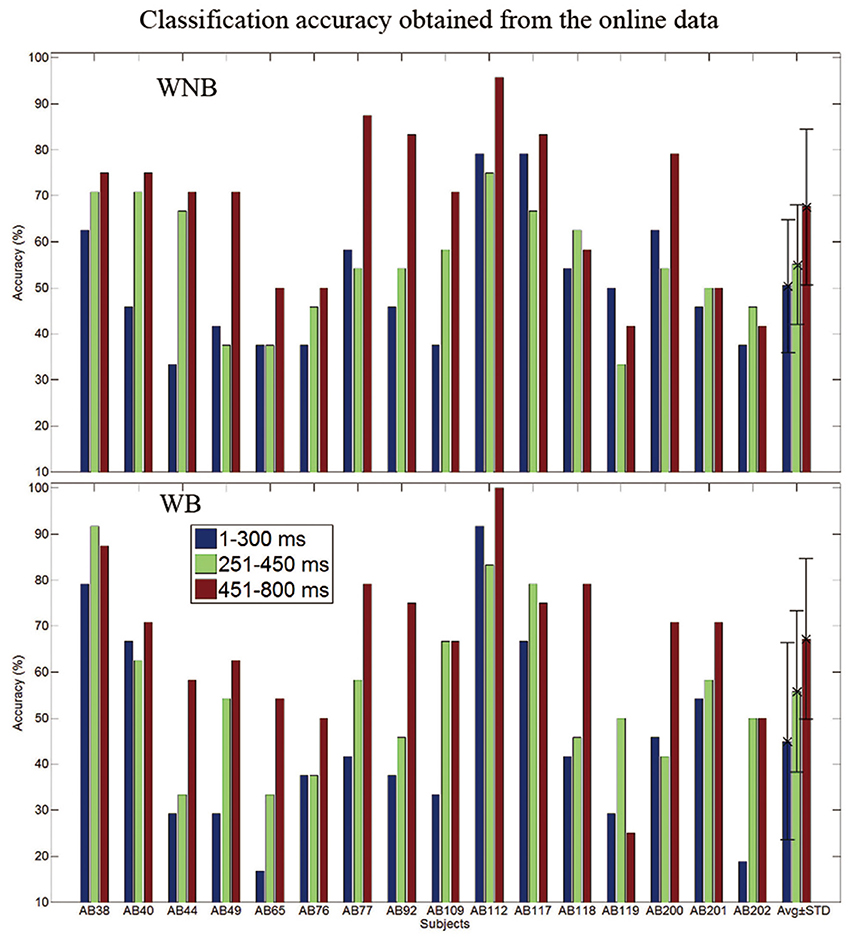

Figure 4 shows the contributions of ERPs between 1 and 300 ms, between 251 and 450 ms, and between 451 and 800 ms to classification performance across subjects. The independent variable was the time window, and the dependent variable was the classification accuracy. Figure 4 shows that ERPs between 1 and 300 ms did not contribute strongly to classification, unlike the P300 potential between 250 and 450 ms. Figure 4 also shows that negative ERPs were predominant between 451 and 800 ms. A two-way repeated measures ANOVA was used to compare the classification accuracies based on these time windows [F(2, 30), p < 0.016]. Potentials between 451 and 800 ms yielded significantly higher classification accuracy than the ERPs between 251 and 450 ms (p < 0.016) and the ERPs between 1 and 300 ms (p < 0.016), and the potentials between 251 and 450 ms yielded significantly higher classification accuracy than the ERPs between 1 and 300 ms (p < 0.016). One-way repeated measures ANOVAs were used to test the contributions of the different time windows to classification accuracy separately for the WNB condition [F(2, 30) = 14.1, p < 0.016] and the WB condition [F(2, 30) = 19.8, p < 0.016]. In both conditions, the potentials between 451 and 800 ms yielded significantly higher classification accuracy than the ERPs between 1 and 300 ms (p < 0.016). They also yielded significantly higher classification accuracy than the ERPs between 251 and 450 ms in the WNB condition (p < 0.016), but not in the WB condition (p = 0.029).
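The degrees of freedom F(2, 30) follow from three time windows and 16 subjects: df_effect = k - 1 = 2 and df_error = (k - 1)(n - 1) = 30. The NumPy sketch below computes a one-way repeated-measures F statistic; it omits sphericity corrections and p-values, and it is not the authors' analysis code (the function name is hypothetical).

```python
import numpy as np


def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data: (n_subjects, k_conditions), e.g. accuracies per subject and window.
    Returns (F, df_effect, df_error).
    """
    data = np.asarray(data, dtype=float)
    n, k = data.shape
    grand = data.mean()
    ss_cond = n * np.sum((data.mean(axis=0) - grand) ** 2)  # between windows
    ss_subj = k * np.sum((data.mean(axis=1) - grand) ** 2)  # between subjects
    ss_total = np.sum((data - grand) ** 2)
    ss_error = ss_total - ss_cond - ss_subj  # condition x subject residual
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    return (ss_cond / df_cond) / (ss_error / df_error), df_cond, df_error
```

Removing the between-subject sum of squares from the error term is what distinguishes this design from an independent-groups ANOVA and makes it suitable for within-subject comparisons such as these time windows.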

Figure 4. The contributions of the evoked potentials between 1 and 300 ms, between 251 and 450 ms and between 451 and 800 ms to BCI classification performance, across subjects.

Discussion

Effects of Background Music

The main goal of this study was to assess the effects of background music on the performance of, and user preferences for, an auditory BCI. Results showed that the subjects preferred the WB condition over the WNB condition (see Table 2). There were no significant differences in classification accuracy between these two conditions. These results indicate that background music could make auditory BCI users more comfortable without impairing classification accuracy (see Figure 4, Tables 1, 2).

The classification accuracy and information transfer rate in the WB condition of the present study were at least comparable to those of related work. For example, Halder and colleagues presented results with a three-stimulus auditory BCI (Halder et al., 2010) and discussed training with an auditory BCI (Halder et al., 2016). The information transfer rate of that three-stimulus BCI was lower than in the present study (the best was 1.7 bit/min). Käthner and colleagues reported that the average accuracy of their auditory BCI was only 66% (SD 24.8) (Käthner et al., 2013). Other studies reported average accuracies of about 70% for their auditory BCIs (Schreuder et al., 2009; Belitski et al., 2011; De Vos et al., 2014). Compared with these studies, the accuracy and information transfer rate of the present study are typical of auditory P300 BCIs.
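Information transfer rates such as the 1.7 bit/min cited above are usually computed with the formula popularized by Wolpaw and colleagues (e.g., Wolpaw et al., 2002). The text does not state which formula was used here, so the following Python sketch is only the standard definition; treating accuracies at or below chance as 0 bits is a common convention we assume, and the function names are hypothetical.

```python
import math


def wolpaw_bits_per_selection(n_classes, p):
    """Bits per selection for an n-class BCI with accuracy p (Wolpaw formula)."""
    if p >= 1.0:
        return math.log2(n_classes)
    if p <= 1.0 / n_classes:
        return 0.0  # assumed convention: no information at or below chance
    return (math.log2(n_classes) + p * math.log2(p)
            + (1 - p) * math.log2((1 - p) / (n_classes - 1)))


def itr_bits_per_min(n_classes, p, selections_per_min):
    """Bit rate = bits per selection x selections per minute."""
    return wolpaw_bits_per_selection(n_classes, p) * selections_per_min
```

For example, a three-class BCI at 100% accuracy transfers log2(3) ≈ 1.58 bits per selection, so 10 selections per minute would correspond to about 15.8 bit/min.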

Target-to-Target Interval (TTI) and Number of Stimuli

This study used a fairly long SOA (300 ms) because our paradigm used only three stimuli. Although we avoided successive repetition of the same stimulus in the same position, a shorter SOA would have produced shorter TTIs, which could have made it difficult for subjects to distinguish the different stimuli and reduced P300 amplitude (Gonsalvez and Polich, 2002).

Most P300 BCIs use more than three stimuli. Adding more stimuli and making them more distinct could make a shorter SOA feasible. For example, Höhne and Tangermann (2014) used an 83.3 ms ISI with 26 stimuli, each with a duration of 200–250 ms. In their study, the target stimulus was presented after 6–10 non-target stimuli, and the TTI was at least 600 ms, which should be enough for the subjects to detect the target stimulus.

ERPs and Relative Contributions to Classification Accuracy

Figure 4 showed that ERPs before 300 ms contributed less to classification accuracy than ERPs from the other two time windows that we analyzed. Some auditory studies reported that their paradigms could evoke a clear mismatch negativity (MMN) (Hill et al., 2004; Kanoh et al., 2008; Brandmeyer et al., 2013). However, we observed no clear negative potentials for target trials around 200 ms, possibly because of differences in our stimuli and task instructions. Several factors can affect the MMN, including modality, stimulus parameters, target probability, sequence order, and task instructions (Näätänen et al., 1993; Pincze et al., 2002; Sculthorpe and Campbell, 2011; Kimura, 2012).

Figure 4 shows that the time window between 451 and 800 ms yielded significantly higher classification accuracy than the other time windows (1–300 ms and 251–450 ms) in most comparisons. Thus, late potentials (after 450 ms) contributed to classification accuracy more than early potentials (before 450 ms) in this study.

The evoked potentials were weak in occipital areas (see Figure 3). This result suggests that the BCI presented here might be practical with a reduced electrode montage that does not include occipital sites. Thus, a conventional electrode cap may not be necessary. Alternative means of mounting electrodes on the head (such as headphones) might reduce preparation time and cost while improving comfort and ease of use. This could be especially important for long-term use by patients with DOC or other severe movement disabilities, for whom occipital electrodes can become uncomfortable when the head rests on a pillow or cushion.

Conclusion

The main goal of this paper was to explore the effects of adding background music to an auditory BCI approach that used three stimuli (Halder et al., 2010). Results showed that the users preferred background music to the canonical approach (no background music) without significant changes in BCI performance. While auditory BCIs have been validated in prior work (Hill et al., 2004; Kübler et al., 2009; Treder et al., 2014; Lesenfants et al., 2016; Ortner et al., accepted), this outcome suggests that future auditory, and perhaps other, BCIs could improve user satisfaction by incorporating background music. Further work is needed to explore issues such as: the best signal processing methods and classifiers; performance with target patients at different locations; improving performance with inefficient subjects; and different types of auditory stimuli and background music, including music chosen based on each subject's mental state (Tseng et al., 2015).

Author Contributions

SZ operated the experiment and analyzed the data. BA improved the discussion and introduction of the paper. AK improved the experiment and the Methods and Results sections. AC offered help with the algorithm. JJ proposed the idea of this paper and wrote the paper. Dr. Yu Zhang helped with the classification method. XW guided the experiment.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant Nos. 61573142, 61203127, 91420302, and 61305028. This work was also supported by the Fundamental Research Funds for the Central Universities (WG1414005, WH1314023, and WH1516018) and the Shanghai Chenguang Program under Grant 14CG31.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Adeli, H., Zhou, Z., and Dadmehr, N. (2003). Analysis of EEG records in an epileptic patient using wavelet transform. J. Neurosci. Methods 123, 69–87. doi: 10.1016/S0165-0270(02)00340-0

Allison, B. Z., Wolpaw, E. W., and Wolpaw, J. R. (2007). Brain–computer interface systems: progress and prospects. Expert Rev. Med. Devices 4, 463–474. doi: 10.1586/17434440.4.4.463

Belitski, A., Farquhar, J., and Desain, P. (2011). P300 audio-visual speller. J. Neural Eng. 8:025022. doi: 10.1088/1741-2560/8/2/025022

Blankertz, B., Sannelli, C., Halder, S., Hammer, E. M., Kübler, A., Müller, K.-R., et al. (2010). Neurophysiological predictor of SMR-based BCI performance. Neuroimage 51, 1303–1309. doi: 10.1016/j.neuroimage.2010.03.022

Brandmeyer, A., Sadakata, M., Spyrou, L., McQueen, J. M., and Desain, P. (2013). Decoding of single-trial auditory mismatch responses for online perceptual monitoring and neurofeedback. Front. Neurosci. 7:265. doi: 10.3389/fnins.2013.00265

Brouwer, A.-M., and Van Erp, J. B. F. (2010). A tactile P300 brain-computer interface. Front. Neurosci. 4:19. doi: 10.3389/fnins.2010.00019

Cai, Z., Makino, S., and Rutkowski, T. M. (2015). Brain evoked potential latencies optimization for spatial auditory brain–computer interface. Cognit. Comput. 7, 34–43. doi: 10.1007/s12559-013-9228-x

Chang, M., Nishikawa, N., Struzik, Z. R., Mori, K., Makino, S., Mandic, D., et al. (2013). Comparison of P300 responses in auditory, visual and audiovisual spatial speller BCI paradigms. arXiv preprint arXiv:1301.6360. doi: 10.3217/978-3-85125-260-6-15

Chen, X., Wang, Y., Nakanishi, M., Gao, X., Jung, T.-P., and Gao, S. (2015). High-speed spelling with a noninvasive brain–computer interface. Proc. Natl. Acad. Sci. U.S.A. 112, E6058–E6067. doi: 10.1073/pnas.1508080112

De Vos, M., Gandras, K., and Debener, S. (2014). Towards a truly mobile auditory brain–computer interface: exploring the P300 to take away. Int. J. Psychophysiol. 91, 46–53. doi: 10.1016/j.ijpsycho.2013.08.010

Edlinger, G., Allison, B. Z., and Guger, C. (2015). “How many people could use a BCI system?,” in Clinical Systems Neuroscience, eds K. Kansaku, L. Cohen, and N. Birbaumer (Tokyo: Springer Verlag), 33–66.

Fazel-Rezai, R., Allison, B. Z., Guger, C., Sellers, E. W., Kleih, S. C., and Kübler, A. (2012). P300 brain computer interface: current challenges and emerging trends. Front. Neuroeng. 5:14. doi: 10.3389/fneng.2012.00014

Furdea, A., Halder, S., Krusienski, D. J., Bross, D., Nijboer, F., Birbaumer, N., et al. (2009). An auditory oddball (P300) spelling system for brain-computer interfaces. Psychophysiology 46, 617–625. doi: 10.1111/j.1469-8986.2008.00783.x

Gonsalvez, C. J., and Polich, J. (2002). P300 amplitude is determined by target-to-target interval. Psychophysiology 39, 388–396. doi: 10.1017/S0048577201393137

Guo, J., Gao, S., and Hong, B. (2010). An auditory brain–computer interface using active mental response. IEEE Trans. Neural. Syst. Rehabil. Eng. 18, 230–235. doi: 10.1109/TNSRE.2010.2047604

Halder, S., Käthner, I., and Kübler, A. (2016). Training leads to increased auditory brain-computer interface performance of end-users with motor impairments. Clin. Neurophysiol. 127, 1288–1296. doi: 10.1016/j.clinph.2015.08.007

Halder, S., Rea, M., Andreoni, R., Nijboer, F., Hammer, E. M., Kleih, S. C., et al. (2010). An auditory oddball brain-computer interface for binary choices. Clin. Neurophysiol. 121, 516–523. doi: 10.1016/j.clinph.2009.11.087

Hill, N. J., Lal, T. N., Bierig, K., Birbaumer, N., and Schölkopf, B. (2004). “An auditory paradigm for brain-computer interfaces,” in NIPS (Vancouver, BC), 569–576.

Hoffmann, U., Vesin, J.-M., Ebrahimi, T., and Diserens, K. (2008). An efficient P300-based brain–computer interface for disabled subjects. J. Neurosci. Methods 167, 115–125. doi: 10.1016/j.jneumeth.2007.03.005

Höhne, J., Schreuder, M., Blankertz, B., and Tangermann, M. (2011). A novel 9-class auditory ERP paradigm driving a predictive text entry system. Front. Neurosci. 5:99. doi: 10.3389/fnins.2011.00099

Höhne, J., and Tangermann, M. (2014). Towards user-friendly spelling with an auditory brain-computer interface: the charstreamer paradigm. PLoS ONE 9:e98322. doi: 10.1371/journal.pone.0098322

Jin, J., Allison, B. Z., Sellers, E. W., Brunner, C., Horki, P., Wang, X., et al. (2011a). An adaptive P300-based control system. J. Neural Eng. 8:036006. doi: 10.1088/1741-2560/8/3/036006

Jin, J., Allison, B. Z., Sellers, E. W., Brunner, C., Horki, P., Wang, X., et al. (2011b). Optimized stimulus presentation patterns for an event-related potential EEG-based brain–computer interface. Med. Biol. Eng. Comput. 49, 181–191. doi: 10.1007/s11517-010-0689-8

Jin, J., Daly, I., Zhang, Y., Wang, X., and Cichocki, A. (2014). An optimized ERP brain–computer interface based on facial expression changes. J. Neural Eng. 11:036004. doi: 10.1088/1741-2560/11/3/036004

Jin, J., Sellers, E. W., and Wang, X. (2012). Targeting an efficient target-to-target interval for P300 speller brain-computer interfaces. Med. Biol. Eng. Comput. 50, 289–296. doi: 10.1007/s11517-012-0868-x

Jin, J., Sellers, E. W., Zhou, S., Zhang, Y., Wang, X., and Cichocki, A. (2015). A P300 brain–computer interface based on a modification of the mismatch negativity paradigm. Int. J. Neural Syst. 25:1550011. doi: 10.1142/S0129065715500112

Kanoh, S. I., Miyamoto, K.-I., and Yoshinobu, T. (2008). “A brain-computer interface (BCI) system based on auditory stream segregation,” in 30th 2008 Annual International Conference of the IEEE Engineering in Medicine and Biology Society (Vancouver, BC), 642–645.

Käthner, I., Ruf, C. A., Pasqualotto, E., Braun, C., Birbaumer, N., and Halder, S. (2013). A portable auditory P300 brain–computer interface with directional cues. Clin. Neurophysiol. 124, 327–338. doi: 10.1016/j.clinph.2012.08.006

Kaufmann, T., Herweg, A., and Kübler, A. (2014). Toward brain-computer interface based wheelchair control utilizing tactually-evoked event-related potentials. J. Neuroeng. Rehabil. 11:7. doi: 10.1186/1743-0003-11-7

Kaufmann, T., Schulz, S., Grünzinger, C., and Kübler, A. (2011). Flashing characters with famous faces improves ERP-based brain–computer interface performance. J. Neural Eng. 8:056016. doi: 10.1088/1741-2560/8/5/056016

Kaufmann, T., Schulz, S. M., Köblitz, A., Renner, G., Wessig, C., and Kübler, A. (2013). Face stimuli effectively prevent brain–computer interface inefficiency in patients with neurodegenerative disease. Clin. Neurophysiol. 124, 893–900. doi: 10.1016/j.clinph.2012.11.006

Kimura, M. (2012). Visual mismatch negativity and unintentional temporal-context-based prediction in vision. Int. J. Psychophysiol. 83, 144–155. doi: 10.1016/j.ijpsycho.2011.11.010

Kübler, A., Furdea, A., Halder, S., Hammer, E. M., Nijboer, F., and Kotchoubey, B. (2009). A brain–computer interface controlled auditory event-related potential (P300) spelling system for locked-in patients. Ann. N.Y. Acad. Sci. 1157, 90–100. doi: 10.1111/j.1749-6632.2008.04122.x

Kübler, A., Kotchoubey, B., Kaiser, J., Wolpaw, J. R., and Birbaumer, N. (2001). Brain–computer communication: unlocking the locked in. Psychol. Bull. 127:358. doi: 10.1037/0033-2909.127.3.358

Lesenfants, D., Chatelle, C., Saab, J., Laureys, S., and Noirhomme, Q. (2016). “Neurotechnology and Direct Brain Communication,” in New Insights and Responsibilities Concerning Speechless but Communicative Subjects, Vol. 85, eds M. Farisco and K. Evers, (London: Routledge).

Li, J., Ji, H., Cao, L., Zang, D., Gu, R., Xia, B., et al. (2014). Evaluation and application of a hybrid brain computer interface for real wheelchair parallel control with multi-degree of freedom. Int. J. Neural Syst. 24:1450014. doi: 10.1142/S0129065714500142

Li, Y., Pan, J., Long, J., and Yu, T. (2016). Multimodal BCIs: target detection, multidimensional control, and awareness evaluation in patients with disorder of consciousness. Proc. IEEE 104, 332–352. doi: 10.1109/JPROC.2015.2469106

Lin, Y. P., Yang, Y. H., and Jung, T. P. (2014). Fusion of electroencephalographic dynamics and musical contents for estimating emotional responses in music listening. Front. Neurosci. 8:94. doi: 10.3389/fnins.2014.00094

Näätänen, R., Paavilainen, P., Tiitinen, H., Jiang, D., and Alho, K. (1993). Attention and mismatch negativity. Psychophysiology 30, 436–450. doi: 10.1111/j.1469-8986.1993.tb02067.x

Nambu, I., Ebisawa, M., Kogure, M., Yano, S., Hokari, H., and Wada, Y. (2013). Estimating the intended sound direction of the user: toward an auditory brain-computer interface using out-of-head sound localization. PLoS ONE 8:e57174. doi: 10.1371/journal.pone.0057174

Ortiz-Rosario, A., and Adeli, H. (2013). Brain-computer interface technologies: from signal to action. Rev. Neurosci. 24, 537–552. doi: 10.1515/revneuro-2013-0032

Ortner, R., Allison, B. Z., Heilinger, A., Sabathiel, N., and Guger, C. (accepted). Assessment of and communication for persons with disorders of consciousness. J. Vis. Exp.

Pincze, Z., Lakatos, P., Rajkai, C., Ulbert, I., and Karmos, G. (2002). Effect of deviant probability and interstimulus/interdeviant interval on the auditory N1 and mismatch negativity in the cat auditory cortex. Cogn. Brain Res. 13, 249–253. doi: 10.1016/S0926-6410(01)00105-7

Riccio, A., Mattia, D., Simione, L., Olivetti, M., and Cincotti, F. (2012). Eye-gaze independent EEG-based brain–computer interfaces for communication. J. Neural Eng. 9:045001. doi: 10.1088/1741-2560/9/4/045001

Risetti, M., Formisano, R., Toppi, J., Quitadamo, L. R., Bianchi, L., Astolfi, L., et al. (2013). On ERPs detection in disorders of consciousness rehabilitation. Front. Hum. Neurosci. 7:775. doi: 10.3389/fnhum.2013.00775

Schreuder, M., Tangermann, M., and Blankertz, B. (2009). Initial results of a high-speed spatial auditory BCI. Int. J. Bioelectromagn. 11, 105–109. Available online at: http://www.ijbem.org/

Sculthorpe, L. D., and Campbell, K. B. (2011). Evidence that the mismatch negativity to pattern violations does not vary with deviant probability. Clin. Neurophysiol. 122, 2236–2245. doi: 10.1016/j.clinph.2011.04.018

Sellers, E. W., and Donchin, E. (2006). A P300-based brain–computer interface: initial tests by ALS patients. Clin. Neurophysiol. 117, 538–548. doi: 10.1016/j.clinph.2005.06.027

Treder, M. S., Purwins, H., Miklody, D., Sturm, I., and Blankertz, B. (2014). Decoding auditory attention to instruments in polyphonic music using single-trial EEG classification. J. Neural Eng. 11:026009. doi: 10.1088/1741-2560/11/2/026009

Tseng, K. C., Lin, B.-S., Wong, A. M.-K., and Lin, B.-S. (2015). Design of a mobile brain computer interface-based smart multimedia controller. Sensors 15, 5518–5530. doi: 10.3390/s150305518

Vidal, J.-J. (1973). Toward direct brain-computer communication. Annu. Rev. Biophys. Bioeng. 2, 157–180. doi: 10.1146/annurev.bb.02.060173.001105

Vidaurre, C., and Blankertz, B. (2010). Towards a cure for BCI illiteracy. Brain Topogr. 23, 194–198. doi: 10.1007/s10548-009-0121-6

Wolpaw, J. R., Birbaumer, N., McFarland, D. J., Pfurtscheller, G., and Vaughan, T. M. (2002). Brain–computer interfaces for communication and control. Clin. Neurophysiol. 113, 767–791. doi: 10.1016/S1388-2457(02)00057-3

Yin, E., Zeyl, T., Saab, R., Hu, D., Zhou, Z., and Chau, T. (2016). An auditory-tactile visual saccade-independent P300 brain–computer interface. Int. J. Neural Syst. 26:1650001. doi: 10.1142/S0129065716500015

Zhang, D., Song, H., Xu, R., Zhou, W., Ling, Z., and Hong, B. (2013a). Toward a minimally invasive brain–computer interface using a single subdural channel: a visual speller study. Neuroimage 71, 30–41. doi: 10.1016/j.neuroimage.2012.12.069

Zhang, Y., Wang, Y., Jin, J., and Wang, X. (2016). Sparse Bayesian learning for obtaining sparsity of EEG frequency bands based feature vectors in motor imagery classification. Int. J. Neural Syst. doi: 10.1142/S0129065716500325. [Epub ahead of print].

Zhang, Y., Zhou, G., Jin, J., Wang, X., and Cichocki, A. (2014). Frequency recognition in SSVEP-based BCI using multiset canonical correlation analysis. Int. J. Neural Syst. 24:1450013. doi: 10.1142/S0129065714500130

Keywords: brain computer interface, event-related potentials, auditory, music background, audio stimulus

Citation: Zhou S, Allison BZ, Kübler A, Cichocki A, Wang X and Jin J (2016) Effects of Background Music on Objective and Subjective Performance Measures in an Auditory BCI. Front. Comput. Neurosci. 10:105. doi: 10.3389/fncom.2016.00105

Received: 24 March 2016; Accepted: 27 September 2016;

Published: 13 October 2016.

Edited by:

Pei-Ji Liang, Shanghai Jiao Tong University, China

Reviewed by:

Marta Olivetti, Sapienza University of Rome, Italy

Yong-Jie Li, University of Electronic Science and Technology of China, China

Copyright © 2016 Zhou, Allison, Kübler, Cichocki, Wang and Jin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jing Jin, jinjingat@gmail.com
